Compare commits

2 commits

e4fa572097

...

f319178b79

| Author | SHA1 | Date | |

|---|---|---|---|

| f319178b79 | |||

| 9b9bcd1487 |

486 changed files with 36800 additions and 5 deletions

278

jupyter/base/values/values.yaml

Normal file

278

jupyter/base/values/values.yaml

Normal file

|

|

@ -0,0 +1,278 @@

|

||||||

|

hub:

|

||||||

|

config:

|

||||||

|

Authenticator:

|

||||||

|

auto_login: true

|

||||||

|

enable_auth_state: true

|

||||||

|

JupyterHub:

|

||||||

|

tornado_settings:

|

||||||

|

headers: { 'Content-Security-Policy': "frame-ancestors *;" }

|

||||||

|

extraConfig:

|

||||||

|

oauthCode: |

|

||||||

|

import json

|

||||||

|

import os

|

||||||

|

import socket

|

||||||

|

from collections import Mapping

|

||||||

|

from functools import lru_cache

|

||||||

|

from urllib.parse import urlencode, urlparse

|

||||||

|

|

||||||

|

import requests

|

||||||

|

import tornado.options

|

||||||

|

import yaml

|

||||||

|

from jupyterhub.services.auth import HubAuthenticated

|

||||||

|

from jupyterhub.utils import url_path_join

|

||||||

|

from oauthenticator.generic import GenericOAuthenticator

|

||||||

|

from tornado.httpclient import AsyncHTTPClient, HTTPRequest

|

||||||

|

from tornado.httpserver import HTTPServer

|

||||||

|

from tornado.ioloop import IOLoop

|

||||||

|

from tornado.log import app_log

|

||||||

|

from tornado.web import Application, HTTPError, RequestHandler, authenticated

|

||||||

|

|

||||||

|

|

||||||

|

def post_auth_hook(authenticator, handler, authentication):

|

||||||

|

user = authentication['auth_state']['oauth_user']['ocs']['data']['id']

|

||||||

|

auth_state = authentication['auth_state']

|

||||||

|

authenticator.user_dict[user] = auth_state

|

||||||

|

return authentication

|

||||||

|

|

||||||

|

|

||||||

|

class NextcloudOAuthenticator(GenericOAuthenticator):

|

||||||

|

|

||||||

|

def __init__(self, *args, **kwargs):

|

||||||

|

super().__init__(*args, **kwargs)

|

||||||

|

self.user_dict = {}

|

||||||

|

|

||||||

|

def pre_spawn_start(self, user, spawner):

|

||||||

|

super().pre_spawn_start(user, spawner)

|

||||||

|

access_token = self.user_dict[user.name]['access_token']

|

||||||

|

# refresh_token = self.user_dict[user.name]['refresh_token']

|

||||||

|

spawner.environment['NEXTCLOUD_ACCESS_TOKEN'] = access_token

|

||||||

|

|

||||||

|

|

||||||

|

c.JupyterHub.authenticator_class = NextcloudOAuthenticator

|

||||||

|

c.NextcloudOAuthenticator.client_id = os.environ['NEXTCLOUD_CLIENT_ID']

|

||||||

|

c.NextcloudOAuthenticator.client_secret = os.environ['NEXTCLOUD_CLIENT_SECRET']

|

||||||

|

c.NextcloudOAuthenticator.login_service = 'Sunet Drive'

|

||||||

|

c.NextcloudOAuthenticator.username_key = lambda r: r.get('ocs', {}).get(

|

||||||

|

'data', {}).get('id')

|

||||||

|

c.NextcloudOAuthenticator.userdata_url = 'https://' + os.environ[

|

||||||

|

'NEXTCLOUD_HOST'] + '/ocs/v2.php/cloud/user?format=json'

|

||||||

|

c.NextcloudOAuthenticator.authorize_url = 'https://' + os.environ[

|

||||||

|

'NEXTCLOUD_HOST'] + '/index.php/apps/oauth2/authorize'

|

||||||

|

c.NextcloudOAuthenticator.token_url = 'https://' + os.environ[

|

||||||

|

'NEXTCLOUD_HOST'] + '/index.php/apps/oauth2/api/v1/token'

|

||||||

|

c.NextcloudOAuthenticator.oauth_callback_url = 'https://' + os.environ[

|

||||||

|

'JUPYTER_HOST'] + '/hub/oauth_callback'

|

||||||

|

c.NextcloudOAuthenticator.refresh_pre_spawn = True

|

||||||

|

c.NextcloudOAuthenticator.enable_auth_state = True

|

||||||

|

c.NextcloudOAuthenticator.post_auth_hook = post_auth_hook

|

||||||

|

|

||||||

|

|

||||||

|

# memoize so we only load config once

|

||||||

|

@lru_cache()

|

||||||

|

def _load_config():

|

||||||

|

"""Load configuration from disk

|

||||||

|

Memoized to only load once

|

||||||

|

"""

|

||||||

|

cfg = {}

|

||||||

|

for source in ('config', 'secret'):

|

||||||

|

path = f"/etc/jupyterhub/{source}/values.yaml"

|

||||||

|

if os.path.exists(path):

|

||||||

|

print(f"Loading {path}")

|

||||||

|

with open(path) as f:

|

||||||

|

values = yaml.safe_load(f)

|

||||||

|

cfg = _merge_dictionaries(cfg, values)

|

||||||

|

else:

|

||||||

|

print(f"No config at {path}")

|

||||||

|

return cfg

|

||||||

|

|

||||||

|

|

||||||

|

def _merge_dictionaries(a, b):

|

||||||

|

"""Merge two dictionaries recursively.

|

||||||

|

Simplified From https://stackoverflow.com/a/7205107

|

||||||

|

"""

|

||||||

|

merged = a.copy()

|

||||||

|

for key in b:

|

||||||

|

if key in a:

|

||||||

|

if isinstance(a[key], Mapping) and isinstance(b[key], Mapping):

|

||||||

|

merged[key] = _merge_dictionaries(a[key], b[key])

|

||||||

|

else:

|

||||||

|

merged[key] = b[key]

|

||||||

|

else:

|

||||||

|

merged[key] = b[key]

|

||||||

|

return merged

|

||||||

|

|

||||||

|

|

||||||

|

def get_config(key, default=None):

|

||||||

|

"""

|

||||||

|

Find a config item of a given name & return it

|

||||||

|

Parses everything as YAML, so lists and dicts are available too

|

||||||

|

get_config("a.b.c") returns config['a']['b']['c']

|

||||||

|

"""

|

||||||

|

value = _load_config()

|

||||||

|

# resolve path in yaml

|

||||||

|

for level in key.split('.'):

|

||||||

|

if not isinstance(value, dict):

|

||||||

|

# a parent is a scalar or null,

|

||||||

|

# can't resolve full path

|

||||||

|

return default

|

||||||

|

if level not in value:

|

||||||

|

return default

|

||||||

|

else:

|

||||||

|

value = value[level]

|

||||||

|

return value

|

||||||

|

|

||||||

|

|

||||||

|

async def fetch_new_token(token_url, client_id, client_secret, refresh_token):

|

||||||

|

params = {

|

||||||

|

"grant_type": "refresh_token",

|

||||||

|

"client_id": client_id,

|

||||||

|

"client_secret": client_secret,

|

||||||

|

"refresh_token": refresh_token,

|

||||||

|

}

|

||||||

|

body = urlencode(params)

|

||||||

|

req = HTTPRequest(token_url, 'POST', body=body)

|

||||||

|

app_log.error("URL: %s body: %s", token_url, body)

|

||||||

|

|

||||||

|

client = AsyncHTTPClient()

|

||||||

|

resp = await client.fetch(req)

|

||||||

|

|

||||||

|

resp_json = json.loads(resp.body.decode('utf8', 'replace'))

|

||||||

|

return resp_json

|

||||||

|

|

||||||

|

|

||||||

|

class TokenHandler(HubAuthenticated, RequestHandler):

|

||||||

|

|

||||||

|

def api_request(self, method, url, **kwargs):

|

||||||

|

"""Make an API request"""

|

||||||

|

url = url_path_join(self.hub_auth.api_url, url)

|

||||||

|

allow_404 = kwargs.pop('allow_404', False)

|

||||||

|

headers = kwargs.setdefault('headers', {})

|

||||||

|

headers.setdefault('Authorization',

|

||||||

|

'token %s' % self.hub_auth.api_token)

|

||||||

|

try:

|

||||||

|

r = requests.request(method, url, **kwargs)

|

||||||

|

except requests.ConnectionError as e:

|

||||||

|

app_log.error("Error connecting to %s: %s", url, e)

|

||||||

|

msg = "Failed to connect to Hub API at %r." % url

|

||||||

|

msg += " Is the Hub accessible at this URL (from host: %s)?" % socket.gethostname(

|

||||||

|

)

|

||||||

|

if '127.0.0.1' in url:

|

||||||

|

msg += " Make sure to set c.JupyterHub.hub_ip to an IP accessible to" + \

|

||||||

|

" single-user servers if the servers are not on the same host as the Hub."

|

||||||

|

raise HTTPError(500, msg)

|

||||||

|

|

||||||

|

data = None

|

||||||

|

if r.status_code == 404 and allow_404:

|

||||||

|

pass

|

||||||

|

elif r.status_code == 403:

|

||||||

|

app_log.error(

|

||||||

|

"I don't have permission to check authorization with JupyterHub, my auth token may have expired: [%i] %s",

|

||||||

|

r.status_code, r.reason)

|

||||||

|

app_log.error(r.text)

|

||||||

|

raise HTTPError(

|

||||||

|

500,

|

||||||

|

"Permission failure checking authorization, I may need a new token"

|

||||||

|

)

|

||||||

|

elif r.status_code >= 500:

|

||||||

|

app_log.error("Upstream failure verifying auth token: [%i] %s",

|

||||||

|

r.status_code, r.reason)

|

||||||

|

app_log.error(r.text)

|

||||||

|

raise HTTPError(

|

||||||

|

502, "Failed to check authorization (upstream problem)")

|

||||||

|

elif r.status_code >= 400:

|

||||||

|

app_log.warning("Failed to check authorization: [%i] %s",

|

||||||

|

r.status_code, r.reason)

|

||||||

|

app_log.warning(r.text)

|

||||||

|

raise HTTPError(500, "Failed to check authorization")

|

||||||

|

else:

|

||||||

|

data = r.json()

|

||||||

|

|

||||||

|

return data

|

||||||

|

|

||||||

|

@authenticated

|

||||||

|

async def get(self):

|

||||||

|

oauth_config = get_config('auth.custom.config')

|

||||||

|

|

||||||

|

client_id = oauth_config['client_id']

|

||||||

|

client_secret = oauth_config['client_secret']

|

||||||

|

token_url = oauth_config['token_url']

|

||||||

|

user_model = self.get_current_user()

|

||||||

|

|

||||||

|

# Fetch current auth state

|

||||||

|

u = self.api_request('GET', url_path_join('users', user_model['name']))

|

||||||

|

app_log.error("User: %s", u)

|

||||||

|

auth_state = u['auth_state']

|

||||||

|

|

||||||

|

new_tokens = await fetch_new_token(token_url, client_id, client_secret,

|

||||||

|

auth_state.get('refresh_token'))

|

||||||

|

|

||||||

|

# update auth state in the hub

|

||||||

|

auth_state['access_token'] = new_tokens['access_token']

|

||||||

|

auth_state['refresh_token'] = new_tokens['refresh_token']

|

||||||

|

self.api_request('PATCH',

|

||||||

|

url_path_join('users', user_model['name']),

|

||||||

|

data=json.dumps({'auth_state': auth_state}))

|

||||||

|

|

||||||

|

# send new token to the user

|

||||||

|

tokens = {'access_token': auth_state.get('access_token')}

|

||||||

|

self.set_header('content-type', 'application/json')

|

||||||

|

self.write(json.dumps(tokens, indent=1, sort_keys=True))

|

||||||

|

|

||||||

|

|

||||||

|

class PingHandler(RequestHandler):

|

||||||

|

|

||||||

|

def get(self):

|

||||||

|

self.set_header('content-type', 'application/json')

|

||||||

|

self.write(json.dumps({'ping': 1}))

|

||||||

|

|

||||||

|

|

||||||

|

def main():

|

||||||

|

tornado.options.parse_command_line()

|

||||||

|

app = Application([

|

||||||

|

(os.environ['JUPYTERHUB_SERVICE_PREFIX'] + 'tokens', TokenHandler),

|

||||||

|

(os.environ['JUPYTERHUB_SERVICE_PREFIX'] + '/?', PingHandler),

|

||||||

|

])

|

||||||

|

|

||||||

|

http_server = HTTPServer(app)

|

||||||

|

url = urlparse(os.environ['JUPYTERHUB_SERVICE_URL'])

|

||||||

|

|

||||||

|

http_server.listen(url.port)

|

||||||

|

|

||||||

|

IOLoop.current().start()

|

||||||

|

|

||||||

|

|

||||||

|

if __name__ == '__main__':

|

||||||

|

main()

|

||||||

|

extraEnv:

|

||||||

|

NEXTCLOUD_HOST: sunet.drive.test.sunet.se

|

||||||

|

JUPYTER_HOST: jupyter.drive.test.sunet.se

|

||||||

|

NEXTCLOUD_CLIENT_ID:

|

||||||

|

valueFrom:

|

||||||

|

secretKeyRef:

|

||||||

|

name: nextcloud-oauth-secrets

|

||||||

|

key: client-id

|

||||||

|

NEXTCLOUD_CLIENT_SECRET:

|

||||||

|

valueFrom:

|

||||||

|

secretKeyRef:

|

||||||

|

name: nextcloud-oauth-secrets

|

||||||

|

key: client-secret

|

||||||

|

singleuser:

|

||||||

|

image:

|

||||||

|

name: docker.sunet.se/drive/jupyter-custom

|

||||||

|

tag: 2023-02-28-2

|

||||||

|

storage:

|

||||||

|

type: none

|

||||||

|

extraEnv:

|

||||||

|

JUPYTER_ENABLE_LAB: "yes"

|

||||||

|

extraFiles:

|

||||||

|

jupyter_notebook_config:

|

||||||

|

mountPath: /home/jovyan/.jupyter/jupyter_server_config.py

|

||||||

|

stringData: |

|

||||||

|

import os

|

||||||

|

c = get_config()

|

||||||

|

c.NotebookApp.allow_origin = '*'

|

||||||

|

c.NotebookApp.tornado_settings = {

|

||||||

|

'headers': { 'Content-Security-Policy': "frame-ancestors *;" }

|

||||||

|

}

|

||||||

|

os.system('/usr/local/bin/nc-sync')

|

||||||

|

mode: 0644

|

||||||

48

rds/base/charts/all/Chart.lock

Normal file

48

rds/base/charts/all/Chart.lock

Normal file

|

|

@ -0,0 +1,48 @@

|

||||||

|

dependencies:

|

||||||

|

- name: layer0-describo

|

||||||

|

repository: file://../layer0_describo

|

||||||

|

version: 0.2.9

|

||||||

|

- name: layer0-web

|

||||||

|

repository: file://../layer0_web

|

||||||

|

version: 0.3.3

|

||||||

|

- name: layer0-helper-describo-token-updater

|

||||||

|

repository: file://../layer0_helper_describo_token_updater

|

||||||

|

version: 0.2.1

|

||||||

|

- name: layer1-port-openscienceframework

|

||||||

|

repository: file://../layer1_port_openscienceframework

|

||||||

|

version: 0.2.3

|

||||||

|

- name: layer1-port-zenodo

|

||||||

|

repository: file://../layer1_port_zenodo

|

||||||

|

version: 0.2.2

|

||||||

|

- name: layer1-port-owncloud

|

||||||

|

repository: file://../layer1_port_owncloud

|

||||||

|

version: 0.3.3

|

||||||

|

- name: layer1-port-reva

|

||||||

|

repository: file://../layer1_port_reva

|

||||||

|

version: 0.2.0

|

||||||

|

- name: layer2-exporter-service

|

||||||

|

repository: file://../layer2_exporter_service

|

||||||

|

version: 0.2.3

|

||||||

|

- name: layer2-port-service

|

||||||

|

repository: file://../layer2_port_service

|

||||||

|

version: 0.2.5

|

||||||

|

- name: layer2-metadata-service

|

||||||

|

repository: file://../layer2_metadata_service

|

||||||

|

version: 0.2.3

|

||||||

|

- name: layer3-token-storage

|

||||||

|

repository: file://../layer3_token_storage

|

||||||

|

version: 0.3.0

|

||||||

|

- name: layer3-research-manager

|

||||||

|

repository: file://../layer3_research_manager

|

||||||

|

version: 0.3.4

|

||||||

|

- name: jaeger

|

||||||

|

repository: file://../jaeger

|

||||||

|

version: 0.34.0

|

||||||

|

- name: redis-cluster

|

||||||

|

repository: file://../redis-cluster

|

||||||

|

version: 7.6.4

|

||||||

|

- name: redis

|

||||||

|

repository: file://../redis

|

||||||

|

version: 16.13.2

|

||||||

|

digest: sha256:643d0156dd67144f1b4fddf70d07155fbe6fc13ae31c06f0ef402f39b6580887

|

||||||

|

generated: "2023-01-16T12:41:35.854+01:00"

|

||||||

106

rds/base/charts/all/Chart.yaml

Normal file

106

rds/base/charts/all/Chart.yaml

Normal file

|

|

@ -0,0 +1,106 @@

|

||||||

|

apiVersion: v2

|

||||||

|

appVersion: "1.0"

|

||||||

|

dependencies:

|

||||||

|

- condition: layer0-describo.enabled

|

||||||

|

name: layer0-describo

|

||||||

|

repository: file://../layer0_describo

|

||||||

|

tags:

|

||||||

|

- layer0

|

||||||

|

version: ^0.2.0

|

||||||

|

- condition: layer0-web.enabled

|

||||||

|

name: layer0-web

|

||||||

|

repository: file://../layer0_web

|

||||||

|

tags:

|

||||||

|

- layer0

|

||||||

|

version: ^0.3.0

|

||||||

|

- condition: feature.redis

|

||||||

|

name: layer0-helper-describo-token-updater

|

||||||

|

repository: file://../layer0_helper_describo_token_updater

|

||||||

|

tags:

|

||||||

|

- layer0

|

||||||

|

version: ^0.2.0

|

||||||

|

- condition: layer1-port-openscienceframework.enabled

|

||||||

|

name: layer1-port-openscienceframework

|

||||||

|

repository: file://../layer1_port_openscienceframework

|

||||||

|

tags:

|

||||||

|

- layer1

|

||||||

|

version: ^0.2.0

|

||||||

|

- condition: layer1-port-zenodo.enabled

|

||||||

|

name: layer1-port-zenodo

|

||||||

|

repository: file://../layer1_port_zenodo

|

||||||

|

tags:

|

||||||

|

- layer1

|

||||||

|

version: ^0.2.0

|

||||||

|

- condition: layer1-port-owncloud.enabled

|

||||||

|

name: layer1-port-owncloud

|

||||||

|

repository: file://../layer1_port_owncloud

|

||||||

|

tags:

|

||||||

|

- layer1

|

||||||

|

version: ^0.3.0

|

||||||

|

- condition: layer1-port-reva.enabled

|

||||||

|

name: layer1-port-reva

|

||||||

|

repository: file://../layer1_port_reva

|

||||||

|

tags:

|

||||||

|

- layer1

|

||||||

|

version: ^0.2.0

|

||||||

|

- name: layer2-exporter-service

|

||||||

|

repository: file://../layer2_exporter_service

|

||||||

|

tags:

|

||||||

|

- layer2

|

||||||

|

version: ^0.2.0

|

||||||

|

- name: layer2-port-service

|

||||||

|

repository: file://../layer2_port_service

|

||||||

|

tags:

|

||||||

|

- layer2

|

||||||

|

version: ^0.2.0

|

||||||

|

- name: layer2-metadata-service

|

||||||

|

repository: file://../layer2_metadata_service

|

||||||

|

tags:

|

||||||

|

- layer2

|

||||||

|

version: ^0.2.0

|

||||||

|

- name: layer3-token-storage

|

||||||

|

repository: file://../layer3_token_storage

|

||||||

|

tags:

|

||||||

|

- layer3

|

||||||

|

version: ^0.3.0

|

||||||

|

- name: layer3-research-manager

|

||||||

|

repository: file://../layer3_research_manager

|

||||||

|

tags:

|

||||||

|

- layer3

|

||||||

|

version: ^0.3.0

|

||||||

|

- condition: feature.jaeger

|

||||||

|

name: jaeger

|

||||||

|

repository: file://../jaeger

|

||||||

|

tags:

|

||||||

|

- monitoring

|

||||||

|

version: ^0.34.0

|

||||||

|

- alias: redis

|

||||||

|

condition: feature.redis

|

||||||

|

name: redis-cluster

|

||||||

|

repository: file://../redis-cluster

|

||||||

|

tags:

|

||||||

|

- storage

|

||||||

|

version: ^7.6.1

|

||||||

|

- alias: redis-helper

|

||||||

|

condition: feature.redis

|

||||||

|

name: redis

|

||||||

|

repository: file://../redis

|

||||||

|

tags:

|

||||||

|

- storage

|

||||||

|

version: ^16.10.1

|

||||||

|

description: A single chart for installing whole sciebo rds ecosystem.

|

||||||

|

home: https://www.research-data-services.org/

|

||||||

|

icon: https://www.research-data-services.org/img/sciebo.png

|

||||||

|

keywords:

|

||||||

|

- research

|

||||||

|

- data

|

||||||

|

- services

|

||||||

|

- zenodo

|

||||||

|

maintainers:

|

||||||

|

- email: peter.heiss@uni-muenster.de

|

||||||

|

name: Heiss

|

||||||

|

name: all

|

||||||

|

sources:

|

||||||

|

- https://github.com/Sciebo-RDS/Sciebo-RDS

|

||||||

|

type: application

|

||||||

|

version: 0.2.10

|

||||||

21

rds/base/charts/all/charts/jaeger/.helmignore

Normal file

21

rds/base/charts/all/charts/jaeger/.helmignore

Normal file

|

|

@ -0,0 +1,21 @@

|

||||||

|

# Patterns to ignore when building packages.

|

||||||

|

# This supports shell glob matching, relative path matching, and

|

||||||

|

# negation (prefixed with !). Only one pattern per line.

|

||||||

|

.DS_Store

|

||||||

|

# Common VCS dirs

|

||||||

|

.git/

|

||||||

|

.gitignore

|

||||||

|

.bzr/

|

||||||

|

.bzrignore

|

||||||

|

.hg/

|

||||||

|

.hgignore

|

||||||

|

.svn/

|

||||||

|

# Common backup files

|

||||||

|

*.swp

|

||||||

|

*.bak

|

||||||

|

*.tmp

|

||||||

|

*~

|

||||||

|

# Various IDEs

|

||||||

|

.project

|

||||||

|

.idea/

|

||||||

|

*.tmproj

|

||||||

23

rds/base/charts/all/charts/jaeger/Chart.yaml

Normal file

23

rds/base/charts/all/charts/jaeger/Chart.yaml

Normal file

|

|

@ -0,0 +1,23 @@

|

||||||

|

apiVersion: v1

|

||||||

|

appVersion: 1.18.0

|

||||||

|

description: A Jaeger Helm chart for Kubernetes

|

||||||

|

home: https://jaegertracing.io

|

||||||

|

icon: https://camo.githubusercontent.com/afa87494e0753b4b1f5719a2f35aa5263859dffb/687474703a2f2f6a61656765722e72656164746865646f63732e696f2f656e2f6c61746573742f696d616765732f6a61656765722d766563746f722e737667

|

||||||

|

keywords:

|

||||||

|

- jaeger

|

||||||

|

- opentracing

|

||||||

|

- tracing

|

||||||

|

- instrumentation

|

||||||

|

maintainers:

|

||||||

|

- email: david.vonthenen@dell.com

|

||||||

|

name: dvonthenen

|

||||||

|

- email: michael.lorant@fairfaxmedia.com.au

|

||||||

|

name: mikelorant

|

||||||

|

- email: naseem@transit.app

|

||||||

|

name: naseemkullah

|

||||||

|

- email: pavel.nikolov@fairfaxmedia.com.au

|

||||||

|

name: pavelnikolov

|

||||||

|

name: jaeger

|

||||||

|

sources:

|

||||||

|

- https://hub.docker.com/u/jaegertracing/

|

||||||

|

version: 0.34.0

|

||||||

10

rds/base/charts/all/charts/jaeger/OWNERS

Normal file

10

rds/base/charts/all/charts/jaeger/OWNERS

Normal file

|

|

@ -0,0 +1,10 @@

|

||||||

|

approvers:

|

||||||

|

- dvonthenen

|

||||||

|

- mikelorant

|

||||||

|

- naseemkullah

|

||||||

|

- pavelnikolov

|

||||||

|

reviewers:

|

||||||

|

- dvonthenen

|

||||||

|

- mikelorant

|

||||||

|

- naseemkullah

|

||||||

|

- pavelnikolov

|

||||||

380

rds/base/charts/all/charts/jaeger/README.md

Normal file

380

rds/base/charts/all/charts/jaeger/README.md

Normal file

|

|

@ -0,0 +1,380 @@

|

||||||

|

# Jaeger

|

||||||

|

|

||||||

|

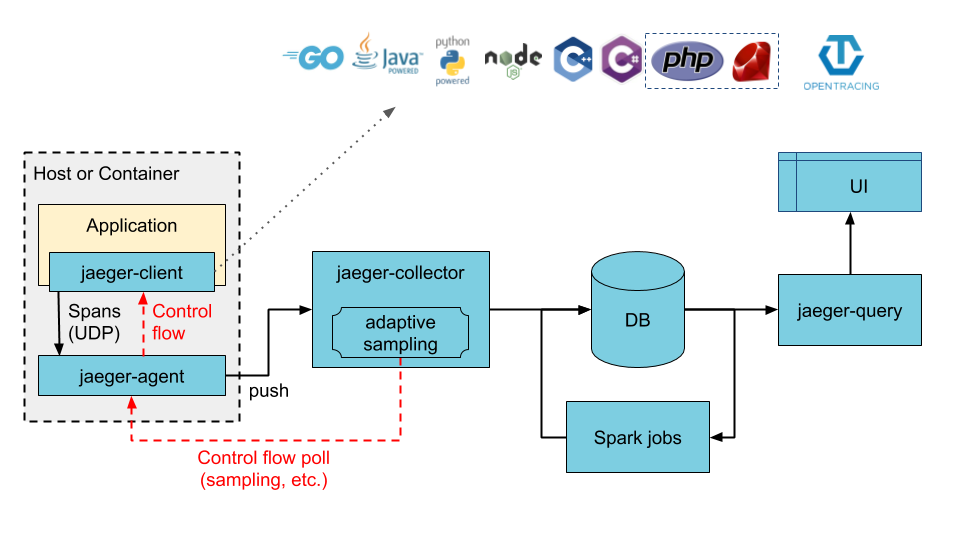

[Jaeger](https://www.jaegertracing.io/) is a distributed tracing system.

|

||||||

|

|

||||||

|

## Introduction

|

||||||

|

|

||||||

|

This chart adds all components required to run Jaeger as described in the [jaeger-kubernetes](https://github.com/jaegertracing/jaeger-kubernetes) GitHub page for a production-like deployment. The chart default will deploy a new Cassandra cluster (using the [cassandra chart](https://github.com/kubernetes/charts/tree/master/incubator/cassandra)), but also supports using an existing Cassandra cluster, deploying a new ElasticSearch cluster (using the [elasticsearch chart](https://github.com/elastic/helm-charts/tree/master/elasticsearch)), or connecting to an existing ElasticSearch cluster. Once the storage backend is available, the chart will deploy jaeger-agent as a DaemonSet and deploy the jaeger-collector and jaeger-query components as Deployments.

|

||||||

|

|

||||||

|

## Installing the Chart

|

||||||

|

|

||||||

|

Add the Jaeger Tracing Helm repository:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm repo add jaegertracing https://jaegertracing.github.io/helm-charts

|

||||||

|

```

|

||||||

|

|

||||||

|

To install the chart with the release name `jaeger`, run the following command:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger

|

||||||

|

```

|

||||||

|

|

||||||

|

By default, the chart deploys the following:

|

||||||

|

|

||||||

|

- Jaeger Agent DaemonSet

|

||||||

|

- Jaeger Collector Deployment

|

||||||

|

- Jaeger Query (UI) Deployment

|

||||||

|

- Cassandra StatefulSet

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

IMPORTANT NOTE: For testing purposes, the footprint for Cassandra can be reduced significantly in the event resources become constrained (such as running on your local laptop or in a Vagrant environment). You can override the resources required run running this command:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger \

|

||||||

|

--set cassandra.config.max_heap_size=1024M \

|

||||||

|

--set cassandra.config.heap_new_size=256M \

|

||||||

|

--set cassandra.resources.requests.memory=2048Mi \

|

||||||

|

--set cassandra.resources.requests.cpu=0.4 \

|

||||||

|

--set cassandra.resources.limits.memory=2048Mi \

|

||||||

|

--set cassandra.resources.limits.cpu=0.4

|

||||||

|

```

|

||||||

|

|

||||||

|

## Installing the Chart using an Existing Cassandra Cluster

|

||||||

|

|

||||||

|

If you already have an existing running Cassandra cluster, you can configure the chart as follows to use it as your backing store (make sure you replace `<HOST>`, `<PORT>`, etc with your values):

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger \

|

||||||

|

--set provisionDataStore.cassandra=false \

|

||||||

|

--set storage.cassandra.host=<HOST> \

|

||||||

|

--set storage.cassandra.port=<PORT> \

|

||||||

|

--set storage.cassandra.user=<USER> \

|

||||||

|

--set storage.cassandra.password=<PASSWORD>

|

||||||

|

```

|

||||||

|

|

||||||

|

## Installing the Chart using an Existing Cassandra Cluster with TLS

|

||||||

|

|

||||||

|

If you already have an existing running Cassandra cluster with TLS, you can configure the chart as follows to use it as your backing store:

|

||||||

|

|

||||||

|

Content of the `values.yaml` file:

|

||||||

|

|

||||||

|

```YAML

|

||||||

|

storage:

|

||||||

|

type: cassandra

|

||||||

|

cassandra:

|

||||||

|

host: <HOST>

|

||||||

|

port: <PORT>

|

||||||

|

user: <USER>

|

||||||

|

password: <PASSWORD>

|

||||||

|

tls:

|

||||||

|

enabled: true

|

||||||

|

secretName: cassandra-tls-secret

|

||||||

|

|

||||||

|

provisionDataStore:

|

||||||

|

cassandra: false

|

||||||

|

```

|

||||||

|

|

||||||

|

Content of the `jaeger-tls-cassandra-secret.yaml` file:

|

||||||

|

|

||||||

|

```YAML

|

||||||

|

apiVersion: v1

|

||||||

|

kind: Secret

|

||||||

|

metadata:

|

||||||

|

name: cassandra-tls-secret

|

||||||

|

data:

|

||||||

|

commonName: <SERVER NAME>

|

||||||

|

ca-cert.pem: |

|

||||||

|

-----BEGIN CERTIFICATE-----

|

||||||

|

<CERT>

|

||||||

|

-----END CERTIFICATE-----

|

||||||

|

client-cert.pem: |

|

||||||

|

-----BEGIN CERTIFICATE-----

|

||||||

|

<CERT>

|

||||||

|

-----END CERTIFICATE-----

|

||||||

|

client-key.pem: |

|

||||||

|

-----BEGIN RSA PRIVATE KEY-----

|

||||||

|

-----END RSA PRIVATE KEY-----

|

||||||

|

cqlshrc: |

|

||||||

|

[ssl]

|

||||||

|

certfile = ~/.cassandra/ca-cert.pem

|

||||||

|

userkey = ~/.cassandra/client-key.pem

|

||||||

|

usercert = ~/.cassandra/client-cert.pem

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

```bash

|

||||||

|

kubectl apply -f jaeger-tls-cassandra-secret.yaml

|

||||||

|

helm install jaeger jaegertracing/jaeger --values values.yaml

|

||||||

|

```

|

||||||

|

|

||||||

|

## Installing the Chart using a New ElasticSearch Cluster

|

||||||

|

|

||||||

|

To install the chart with the release name `jaeger` using a new ElasticSearch cluster instead of Cassandra (default), run the following command:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger \

|

||||||

|

--set provisionDataStore.cassandra=false \

|

||||||

|

--set provisionDataStore.elasticsearch=true \

|

||||||

|

--set storage.type=elasticsearch

|

||||||

|

```

|

||||||

|

|

||||||

|

## Installing the Chart using an Existing Elasticsearch Cluster

|

||||||

|

|

||||||

|

A release can be configured as follows to use an existing ElasticSearch cluster as it as the storage backend:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger \

|

||||||

|

--set provisionDataStore.cassandra=false \

|

||||||

|

--set storage.type=elasticsearch \

|

||||||

|

--set storage.elasticsearch.host=<HOST> \

|

||||||

|

--set storage.elasticsearch.port=<PORT> \

|

||||||

|

--set storage.elasticsearch.user=<USER> \

|

||||||

|

--set storage.elasticsearch.password=<password>

|

||||||

|

```

|

||||||

|

|

||||||

|

## Installing the Chart using an Existing ElasticSearch Cluster with TLS

|

||||||

|

|

||||||

|

If you already have an existing running ElasticSearch cluster with TLS, you can configure the chart as follows to use it as your backing store:

|

||||||

|

|

||||||

|

Content of the `jaeger-values.yaml` file:

|

||||||

|

|

||||||

|

```YAML

|

||||||

|

storage:

|

||||||

|

type: elasticsearch

|

||||||

|

elasticsearch:

|

||||||

|

host: <HOST>

|

||||||

|

port: <PORT>

|

||||||

|

scheme: https

|

||||||

|

user: <USER>

|

||||||

|

password: <PASSWORD>

|

||||||

|

provisionDataStore:

|

||||||

|

cassandra: false

|

||||||

|

elasticsearch: false

|

||||||

|

query:

|

||||||

|

cmdlineParams:

|

||||||

|

es.tls.ca: "/tls/es.pem"

|

||||||

|

extraConfigmapMounts:

|

||||||

|

- name: jaeger-tls

|

||||||

|

mountPath: /tls

|

||||||

|

subPath: ""

|

||||||

|

configMap: jaeger-tls

|

||||||

|

readOnly: true

|

||||||

|

collector:

|

||||||

|

cmdlineParams:

|

||||||

|

es.tls.ca: "/tls/es.pem"

|

||||||

|

extraConfigmapMounts:

|

||||||

|

- name: jaeger-tls

|

||||||

|

mountPath: /tls

|

||||||

|

subPath: ""

|

||||||

|

configMap: jaeger-tls

|

||||||

|

readOnly: true

|

||||||

|

spark:

|

||||||

|

enabled: true

|

||||||

|

cmdlineParams:

|

||||||

|

java.opts: "-Djavax.net.ssl.trustStore=/tls/trust.store -Djavax.net.ssl.trustStorePassword=changeit"

|

||||||

|

extraConfigmapMounts:

|

||||||

|

- name: jaeger-tls

|

||||||

|

mountPath: /tls

|

||||||

|

subPath: ""

|

||||||

|

configMap: jaeger-tls

|

||||||

|

readOnly: true

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Generate configmap jaeger-tls:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

keytool -import -trustcacerts -keystore trust.store -storepass changeit -alias es-root -file es.pem

|

||||||

|

kubectl create configmap jaeger-tls --from-file=trust.store --from-file=es.pem

|

||||||

|

```

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger --values jaeger-values.yaml

|

||||||

|

```

|

||||||

|

|

||||||

|

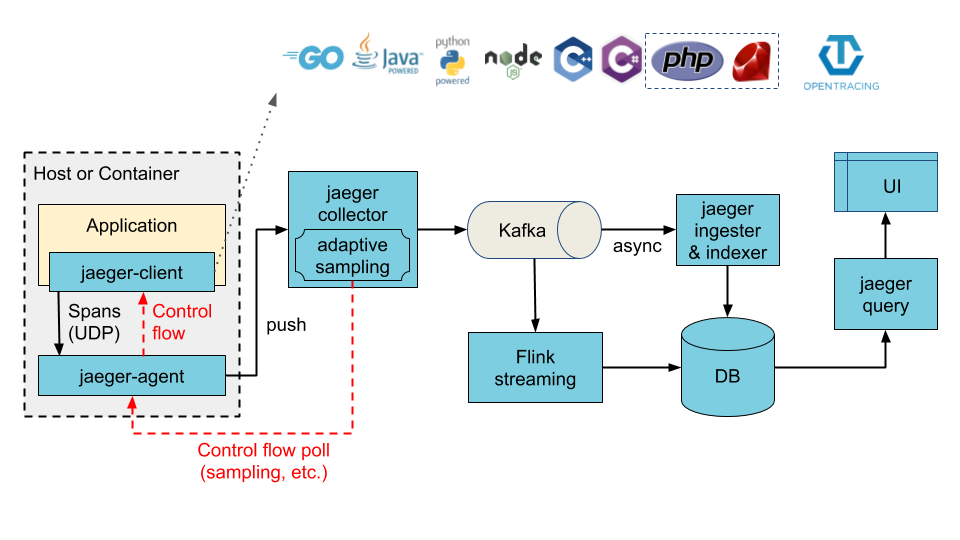

## Installing the Chart with Ingester enabled

|

||||||

|

|

||||||

|

The architecture illustrated below can be achieved by enabling the ingester component. When enabled, Cassandra or Elasticsearch (depending on the configured values) now becomes the ingester's storage backend, whereas Kafka becomes the storage backend of the collector service.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## Installing the Chart with Ingester enabled using a New Kafka Cluster

|

||||||

|

|

||||||

|

To provision a new Kafka cluster along with jaeger-ingester:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger \

|

||||||

|

--set provisionDataStore.kafka=true \

|

||||||

|

--set ingester.enabled=true

|

||||||

|

```

|

||||||

|

|

||||||

|

## Installing the Chart with Ingester using an existing Kafka Cluster

|

||||||

|

|

||||||

|

You can use an exisiting Kafka cluster with jaeger too

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install jaeger jaegertracing/jaeger \

|

||||||

|

--set ingester.enabled=true \

|

||||||

|

--set storage.kafka.brokers={<BROKER1:PORT>,<BROKER2:PORT>} \

|

||||||

|

--set storage.kafka.topic=<TOPIC>

|

||||||

|

```

|

||||||

|

|

||||||

|

## Configuration

|

||||||

|

|

||||||

|

The following table lists the configurable parameters of the Jaeger chart and their default values.

|

||||||

|

|

||||||

|

| Parameter | Description | Default |

|

||||||

|

|-----------|-------------|---------|

|

||||||

|

| `<agent\|collector\|query\|ingester>.cmdlineParams` | Additional command line parameters | `nil` |

|

||||||

|

| `<component>.extraEnv` | Additional environment variables | [] |

|

||||||

|

| `<component>.nodeSelector` | Node selector | {} |

|

||||||

|

| `<component>.tolerations` | Node tolerations | [] |

|

||||||

|

| `<component>.affinity` | Affinity | {} |

|

||||||

|

| `<component>.podAnnotations` | Pod annotations | `nil` |

|

||||||

|

| `<component>.podSecurityContext` | Pod security context | {} |

|

||||||

|

| `<component>.securityContext` | Container security context | {} |

|

||||||

|

| `<component>.serviceAccount.create` | Create service account | `true` |

|

||||||

|

| `<component>.serviceAccount.name` | The name of the ServiceAccount to use. If not set and create is true, a name is generated using the fullname template | `nil` |

|

||||||

|

| `<component>.serviceMonitor.enabled` | Create serviceMonitor | `false` |

|

||||||

|

| `<component>.serviceMonitor.additionalLabels` | Add additional labels to serviceMonitor | {} |

|

||||||

|

| `agent.annotations` | Annotations for Agent | `nil` |

|

||||||

|

| `agent.dnsPolicy` | Configure DNS policy for agents | `ClusterFirst` |

|

||||||

|

| `agent.service.annotations` | Annotations for Agent SVC | `nil` |

|

||||||

|

| `agent.service.binaryPort` | jaeger.thrift over binary thrift | `6832` |

|

||||||

|

| `agent.service.compactPort` | jaeger.thrift over compact thrift| `6831` |

|

||||||

|

| `agent.image` | Image for Jaeger Agent | `jaegertracing/jaeger-agent` |

|

||||||

|

| `agent.imagePullSecrets` | Secret to pull the Image for Jaeger Agent | `[]` |

|

||||||

|

| `agent.pullPolicy` | Agent image pullPolicy | `IfNotPresent` |

|

||||||

|

| `agent.service.loadBalancerSourceRanges` | list of IP CIDRs allowed access to load balancer (if supported) | `[]` |

|

||||||

|

| `agent.service.annotations` | Annotations for Agent SVC | `nil` |

|

||||||

|

| `agent.service.binaryPort` | jaeger.thrift over binary thrift | `6832` |

|

||||||

|

| `agent.service.compactPort` | jaeger.thrift over compact thrift | `6831` |

|

||||||

|

| `agent.service.zipkinThriftPort` | zipkin.thrift over compact thrift | `5775` |

|

||||||

|

| `agent.extraConfigmapMounts` | Additional agent configMap mounts | `[]` |

|

||||||

|

| `agent.extraSecretMounts` | Additional agent secret mounts | `[]` |

|

||||||

|

| `agent.useHostNetwork` | Enable hostNetwork for agents | `false` |

|

||||||

|

| `agent.priorityClassName` | Priority class name for the agent pods | `nil` |

|

||||||

|

| `collector.autoscaling.enabled` | Enable horizontal pod autoscaling | `false` |

|

||||||

|

| `collector.autoscaling.minReplicas` | Minimum replicas | 2 |

|

||||||

|

| `collector.autoscaling.maxReplicas` | Maximum replicas | 10 |

|

||||||

|

| `collector.autoscaling.targetCPUUtilizationPercentage` | Target CPU utilization | 80 |

|

||||||

|

| `collector.autoscaling.targetMemoryUtilizationPercentage` | Target memory utilization | `nil` |

|

||||||

|

| `collector.image` | Image for jaeger collector | `jaegertracing/jaeger-collector` |

|

||||||

|

| `collector.imagePullSecrets` | Secret to pull the Image for Jaeger Collector | `[]` |

|

||||||

|

| `collector.pullPolicy` | Collector image pullPolicy | `IfNotPresent` |

|

||||||

|

| `collector.service.annotations` | Annotations for Collector SVC | `nil` |

|

||||||

|

| `collector.service.grpc.port` | Jaeger Agent port for model.proto | `14250` |

|

||||||

|

| `collector.service.http.port` | Client port for HTTP thrift | `14268` |

|

||||||

|

| `collector.service.loadBalancerSourceRanges` | list of IP CIDRs allowed access to load balancer (if supported) | `[]` |

|

||||||

|

| `collector.service.type` | Service type | `ClusterIP` |

|

||||||

|

| `collector.service.zipkin.port` | Zipkin port for JSON/thrift HTTP | `nil` |

|

||||||

|

| `collector.extraConfigmapMounts` | Additional collector configMap mounts | `[]` |

|

||||||

|

| `collector.extraSecretMounts` | Additional collector secret mounts | `[]` |

|

||||||

|

| `collector.samplingConfig` | [Sampling strategies json file](https://www.jaegertracing.io/docs/latest/sampling/#collector-sampling-configuration) | `nil` |

|

||||||

|

| `collector.priorityClassName` | Priority class name for the collector pods | `nil` |

|

||||||

|

| `ingester.enabled` | Enable ingester component, collectors will write to Kafka | `false` |

|

||||||

|

| `ingester.autoscaling.enabled` | Enable horizontal pod autoscaling | `false` |

|

||||||

|

| `ingester.autoscaling.minReplicas` | Minimum replicas | 2 |

|

||||||

|

| `ingester.autoscaling.maxReplicas` | Maximum replicas | 10 |

|

||||||

|

| `ingester.autoscaling.targetCPUUtilizationPercentage` | Target CPU utilization | 80 |

|

||||||

|

| `ingester.autoscaling.targetMemoryUtilizationPercentage` | Target memory utilization | `nil` |

|

||||||

|

| `ingester.service.annotations` | Annotations for Ingester SVC | `nil` |

|

||||||

|

| `ingester.image` | Image for jaeger Ingester | `jaegertracing/jaeger-ingester` |

|

||||||

|

| `ingester.imagePullSecrets` | Secret to pull the Image for Jaeger Ingester | `[]` |

|

||||||

|

| `ingester.pullPolicy` | Ingester image pullPolicy | `IfNotPresent` |

|

||||||

|

| `ingester.service.annotations` | Annotations for Ingester SVC | `nil` |

|

||||||

|

| `ingester.service.loadBalancerSourceRanges` | list of IP CIDRs allowed access to load balancer (if supported) | `[]` |

|

||||||

|

| `ingester.service.type` | Service type | `ClusterIP` |

|

||||||

|

| `ingester.extraConfigmapMounts` | Additional Ingester configMap mounts | `[]` |

|

||||||

|

| `ingester.extraSecretMounts` | Additional Ingester secret mounts | `[]` |

|

||||||

|

| `fullnameOverride` | Override full name | `nil` |

|

||||||

|

| `hotrod.enabled` | Enables the Hotrod demo app | `false` |

|

||||||

|

| `hotrod.service.loadBalancerSourceRanges` | list of IP CIDRs allowed access to load balancer (if supported) | `[]` |

|

||||||

|

| `hotrod.image.pullSecrets` | Secret to pull the Image for the Hotrod demo app | `[]` |

|

||||||

|

| `nameOverride` | Override name| `nil` |

|

||||||

|

| `provisionDataStore.cassandra` | Provision Cassandra Data Store| `true` |

|

||||||

|

| `provisionDataStore.elasticsearch` | Provision Elasticsearch Data Store | `false` |

|

||||||

|

| `provisionDataStore.kafka` | Provision Kafka Data Store | `false` |

|

||||||

|

| `query.agentSidecar.enabled` | Enable agent sidecare for query deployment | `true` |

|

||||||

|

| `query.config` | [UI Config json file](https://www.jaegertracing.io/docs/latest/frontend-ui/) | `nil` |

|

||||||

|

| `query.service.annotations` | Annotations for Query SVC | `nil` |

|

||||||

|

| `query.image` | Image for Jaeger Query UI | `jaegertracing/jaeger-query` |

|

||||||

|

| `query.imagePullSecrets` | Secret to pull the Image for Jaeger Query UI | `[]` |

|

||||||

|

| `query.ingress.enabled` | Allow external traffic access | `false` |

|

||||||

|

| `query.ingress.annotations` | Configure annotations for Ingress | `{}` |

|

||||||

|

| `query.ingress.hosts` | Configure host for Ingress | `nil` |

|

||||||

|

| `query.ingress.tls` | Configure tls for Ingress | `nil` |

|

||||||

|

| `query.pullPolicy` | Query UI image pullPolicy | `IfNotPresent` |

|

||||||

|

| `query.service.loadBalancerSourceRanges` | list of IP CIDRs allowed access to load balancer (if supported) | `[]` |

|

||||||

|

| `query.service.nodePort` | Specific node port to use when type is NodePort | `nil` |

|

||||||

|

| `query.service.port` | External accessible port | `80` |

|

||||||

|

| `query.service.type` | Service type | `ClusterIP` |

|

||||||

|

| `query.basePath` | Base path of Query UI, used for ingress as well (if it is enabled) | `/` |

|

||||||

|

| `query.extraConfigmapMounts` | Additional query configMap mounts | `[]` |

|

||||||

|

| `query.priorityClassName` | Priority class name for the Query UI pods | `nil` |

|

||||||

|

| `schema.annotations` | Annotations for the schema job| `nil` |

|

||||||

|

| `schema.extraConfigmapMounts` | Additional cassandra schema job configMap mounts | `[]` |

|

||||||

|

| `schema.image` | Image to setup cassandra schema | `jaegertracing/jaeger-cassandra-schema` |

|

||||||

|

| `schema.imagePullSecrets` | Secret to pull the Image for the Cassandra schema setup job | `[]` |

|

||||||

|

| `schema.pullPolicy` | Schema image pullPolicy | `IfNotPresent` |

|

||||||

|

| `schema.activeDeadlineSeconds` | Deadline in seconds for cassandra schema creation job to complete | `120` |

|

||||||

|

| `schema.keyspace` | Set explicit keyspace name | `nil` |

|

||||||

|

| `spark.enabled` | Enables the dependencies job| `false` |

|

||||||

|

| `spark.image` | Image for the dependencies job| `jaegertracing/spark-dependencies` |

|

||||||

|

| `spark.imagePullSecrets` | Secret to pull the Image for the Spark dependencies job | `[]` |

|

||||||

|

| `spark.pullPolicy` | Image pull policy of the deps image | `Always` |

|

||||||

|

| `spark.schedule` | Schedule of the cron job | `"49 23 * * *"` |

|

||||||

|

| `spark.successfulJobsHistoryLimit` | Cron job successfulJobsHistoryLimit | `5` |

|

||||||

|

| `spark.failedJobsHistoryLimit` | Cron job failedJobsHistoryLimit | `5` |

|

||||||

|

| `spark.tag` | Tag of the dependencies job image | `latest` |

|

||||||

|

| `spark.extraConfigmapMounts` | Additional spark configMap mounts | `[]` |

|

||||||

|

| `spark.extraSecretMounts` | Additional spark secret mounts | `[]` |

|

||||||

|

| `esIndexCleaner.enabled` | Enables the ElasticSearch indices cleanup job| `false` |

|

||||||

|

| `esIndexCleaner.image` | Image for the ElasticSearch indices cleanup job| `jaegertracing/jaeger-es-index-cleaner` |

|

||||||

|

| `esIndexCleaner.imagePullSecrets` | Secret to pull the Image for the ElasticSearch indices cleanup job | `[]` |

|

||||||

|

| `esIndexCleaner.pullPolicy` | Image pull policy of the ES cleanup image | `Always` |

|

||||||

|

| `esIndexCleaner.numberOfDays` | ElasticSearch indices older than this number (Number of days) would be deleted by the CronJob | `7`

|

||||||

|

| `esIndexCleaner.schedule` | Schedule of the cron job | `"55 23 * * *"` |

|

||||||

|

| `esIndexCleaner.successfulJobsHistoryLimit` | successfulJobsHistoryLimit for ElasticSearch indices cleanup CronJob | `5` |

|

||||||

|

| `esIndexCleaner.failedJobsHistoryLimit` | failedJobsHistoryLimit for ElasticSearch indices cleanup CronJob | `5` |

|

||||||

|

| `esIndexCleaner.tag` | Tag of the dependencies job image | `latest` |

|

||||||

|

| `esIndexCleaner.extraConfigmapMounts` | Additional esIndexCleaner configMap mounts | `[]` |

|

||||||

|

| `esIndexCleaner.extraSecretMounts` | Additional esIndexCleaner secret mounts | `[]` |

|

||||||

|

| `storage.cassandra.env` | Extra cassandra related env vars to be configured on components that talk to cassandra | `cassandra` |

|

||||||

|

| `storage.cassandra.cmdlineParams` | Extra cassandra related command line options to be configured on components that talk to cassandra | `cassandra` |

|

||||||

|

| `storage.cassandra.existingSecret` | Name of existing password secret object (for password authentication | `nil`

|

||||||

|

| `storage.cassandra.host` | Provisioned cassandra host | `cassandra` |

|

||||||

|

| `storage.cassandra.keyspace` | Schema name for cassandra | `jaeger_v1_test` |

|

||||||

|

| `storage.cassandra.password` | Provisioned cassandra password (ignored if storage.cassandra.existingSecret set) | `password` |

|

||||||

|

| `storage.cassandra.port` | Provisioned cassandra port | `9042` |

|

||||||

|

| `storage.cassandra.tls.enabled` | Provisioned cassandra TLS connection enabled | `false` |

|

||||||

|

| `storage.cassandra.tls.secretName` | Provisioned cassandra TLS connection existing secret name (possible keys in secret: `ca-cert.pem`, `client-key.pem`, `client-cert.pem`, `cqlshrc`, `commonName`) | `` |

|

||||||

|

| `storage.cassandra.usePassword` | Use password | `true` |

|

||||||

|

| `storage.cassandra.user` | Provisioned cassandra username | `user` |

|

||||||

|

| `storage.elasticsearch.env` | Extra ES related env vars to be configured on components that talk to ES | `nil` |

|

||||||

|

| `storage.elasticsearch.cmdlineParams` | Extra ES related command line options to be configured on components that talk to ES | `nil` |

|

||||||

|

| `storage.elasticsearch.existingSecret` | Name of existing password secret object (for password authentication | `nil` |

|

||||||

|

| `storage.elasticsearch.existingSecretKey` | Key of the declared password secret | `password` |

|

||||||

|

| `storage.elasticsearch.host` | Provisioned elasticsearch host| `elasticsearch` |

|

||||||

|

| `storage.elasticsearch.password` | Provisioned elasticsearch password (ignored if storage.elasticsearch.existingSecret set | `changeme` |

|

||||||

|

| `storage.elasticsearch.port` | Provisioned elasticsearch port| `9200` |

|

||||||

|

| `storage.elasticsearch.scheme` | Provisioned elasticsearch scheme | `http` |

|

||||||

|

| `storage.elasticsearch.usePassword` | Use password | `true` |

|

||||||

|

| `storage.elasticsearch.user` | Provisioned elasticsearch user| `elastic` |

|

||||||

|

| `storage.elasticsearch.indexPrefix` | Index Prefix for elasticsearch | `nil` |

|

||||||

|

| `storage.elasticsearch.nodesWanOnly` | Only access specified es host | `false` |

|

||||||

|

| `storage.kafka.authentication` | Authentication type used to authenticate with kafka cluster. e.g. none, kerberos, tls | `none` |

|

||||||

|

| `storage.kafka.brokers` | Broker List for Kafka with port | `kafka:9092` |

|

||||||

|

| `storage.kafka.topic` | Topic name for Kafka | `jaeger_v1_test` |

|

||||||

|

| `storage.type` | Storage type (ES or Cassandra)| `cassandra` |

|

||||||

|

| `tag` | Image tag/version | `1.18.0` |

|

||||||

|

|

||||||

|

For more information about some of the tunable parameters that Cassandra provides, please visit the helm chart for [cassandra](https://github.com/kubernetes/charts/tree/master/incubator/cassandra) and the official [website](http://cassandra.apache.org/) at apache.org.

|

||||||

|

|

||||||

|

For more information about some of the tunable parameters that Jaeger provides, please visit the official [Jaeger repo](https://github.com/uber/jaeger) at GitHub.com.

|

||||||

|

|

||||||

|

### Pending enhancements

|

||||||

|

|

||||||

|

- [ ] Sidecar deployment support

|

||||||

|

|

@ -0,0 +1,17 @@

|

||||||

|

# Patterns to ignore when building packages.

|

||||||

|

# This supports shell glob matching, relative path matching, and

|

||||||

|

# negation (prefixed with !). Only one pattern per line.

|

||||||

|

.DS_Store

|

||||||

|

# Common VCS dirs

|

||||||

|

.git/

|

||||||

|

.gitignore

|

||||||

|

# Common backup files

|

||||||

|

*.swp

|

||||||

|

*.bak

|

||||||

|

*.tmp

|

||||||

|

*~

|

||||||

|

# Various IDEs

|

||||||

|

.project

|

||||||

|

.idea/

|

||||||

|

*.tmproj

|

||||||

|

OWNERS

|

||||||

|

|

@ -0,0 +1,18 @@

|

||||||

|

apiVersion: v1

|

||||||

|

appVersion: 3.11.6

|

||||||

|

description: Apache Cassandra is a free and open-source distributed database management

|

||||||

|

system designed to handle large amounts of data across many commodity servers, providing

|

||||||

|

high availability with no single point of failure.

|

||||||

|

home: http://cassandra.apache.org

|

||||||

|

icon: https://upload.wikimedia.org/wikipedia/commons/thumb/5/5e/Cassandra_logo.svg/330px-Cassandra_logo.svg.png

|

||||||

|

keywords:

|

||||||

|

- cassandra

|

||||||

|

- database

|

||||||

|

- nosql

|

||||||

|

maintainers:

|

||||||

|

- email: goonohc@gmail.com

|

||||||

|

name: KongZ

|

||||||

|

- email: maor.friedman@redhat.com

|

||||||

|

name: maorfr

|

||||||

|

name: cassandra

|

||||||

|

version: 0.15.2

|

||||||

218

rds/base/charts/all/charts/jaeger/charts/cassandra/README.md

Normal file

218

rds/base/charts/all/charts/jaeger/charts/cassandra/README.md

Normal file

|

|

@ -0,0 +1,218 @@

|

||||||

|

# Cassandra

|

||||||

|

A Cassandra Chart for Kubernetes

|

||||||

|

|

||||||

|

## Install Chart

|

||||||

|

To install the Cassandra Chart into your Kubernetes cluster (This Chart requires persistent volume by default, you may need to create a storage class before install chart. To create storage class, see [Persist data](#persist_data) section)

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install --namespace "cassandra" -n "cassandra" incubator/cassandra

|

||||||

|

```

|

||||||

|

|

||||||

|

After installation succeeds, you can get a status of Chart

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm status "cassandra"

|

||||||

|

```

|

||||||

|

|

||||||

|

If you want to delete your Chart, use this command

|

||||||

|

```bash

|

||||||

|

helm delete --purge "cassandra"

|

||||||

|

```

|

||||||

|

|

||||||

|

## Upgrading

|

||||||

|

|

||||||

|

To upgrade your Cassandra release, simply run

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm upgrade "cassandra" incubator/cassandra

|

||||||

|

```

|

||||||

|

|

||||||

|

### 0.12.0

|

||||||

|

|

||||||

|

This version fixes https://github.com/helm/charts/issues/7803 by removing mutable labels in `spec.VolumeClaimTemplate.metadata.labels` so that it is upgradable.

|

||||||

|

|

||||||

|

Until this version, in order to upgrade, you have to delete the Cassandra StatefulSet before upgrading:

|

||||||

|

```bash

|

||||||

|

$ kubectl delete statefulset --cascade=false my-cassandra-release

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

## Persist data

|

||||||

|

You need to create `StorageClass` before able to persist data in persistent volume.

|

||||||

|

To create a `StorageClass` on Google Cloud, run the following

|

||||||

|

|

||||||

|

```bash

|

||||||

|

kubectl create -f sample/create-storage-gce.yaml

|

||||||

|

```

|

||||||

|

|

||||||

|

And set the following values in `values.yaml`

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

persistence:

|

||||||

|

enabled: true

|

||||||

|

```

|

||||||

|

|

||||||

|

If you want to create a `StorageClass` on other platform, please see documentation here [https://kubernetes.io/docs/user-guide/persistent-volumes/](https://kubernetes.io/docs/user-guide/persistent-volumes/)

|

||||||

|

|

||||||

|

When running a cluster without persistence, the termination of a pod will first initiate a decommissioning of that pod.

|

||||||

|

Depending on the amount of data stored inside the cluster this may take a while. In order to complete a graceful

|

||||||

|

termination, pods need to get more time for it. Set the following values in `values.yaml`:

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

podSettings:

|

||||||

|

terminationGracePeriodSeconds: 1800

|

||||||

|

```

|

||||||

|

|

||||||

|

## Install Chart with specific cluster size

|

||||||

|

By default, this Chart will create a cassandra with 3 nodes. If you want to change the cluster size during installation, you can use `--set config.cluster_size={value}` argument. Or edit `values.yaml`

|

||||||

|

|

||||||

|

For example:

|

||||||

|

Set cluster size to 5

|

||||||

|

|

||||||

|

```bash

|

||||||

|

helm install --namespace "cassandra" -n "cassandra" --set config.cluster_size=5 incubator/cassandra/

|

||||||

|

```

|

||||||

|

|

||||||

|

## Install Chart with specific resource size

|

||||||

|

By default, this Chart will create a cassandra with CPU 2 vCPU and 4Gi of memory which is suitable for development environment.

|

||||||

|

If you want to use this Chart for production, I would recommend to update the CPU to 4 vCPU and 16Gi. Also increase size of `max_heap_size` and `heap_new_size`.

|

||||||

|

To update the settings, edit `values.yaml`

|

||||||

|

|

||||||

|

## Install Chart with specific node

|

||||||

|

Sometime you may need to deploy your cassandra to specific nodes to allocate resources. You can use node selector by edit `nodes.enabled=true` in `values.yaml`

|

||||||

|

For example, you have 6 vms in node pools and you want to deploy cassandra to node which labeled as `cloud.google.com/gke-nodepool: pool-db`

|

||||||

|

|

||||||

|

Set the following values in `values.yaml`

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

nodes:

|

||||||

|

enabled: true

|

||||||

|

selector:

|

||||||

|

nodeSelector:

|

||||||

|

cloud.google.com/gke-nodepool: pool-db

|

||||||

|

```

|

||||||

|

|

||||||

|

## Configuration

|

||||||

|

|

||||||

|

The following table lists the configurable parameters of the Cassandra chart and their default values.

|

||||||

|

|

||||||

|

| Parameter | Description | Default |

|

||||||

|

| ----------------------- | --------------------------------------------- | ---------------------------------------------------------- |

|

||||||

|

| `image.repo` | `cassandra` image repository | `cassandra` |

|

||||||

|

| `image.tag` | `cassandra` image tag | `3.11.5` |

|

||||||

|

| `image.pullPolicy` | Image pull policy | `Always` if `imageTag` is `latest`, else `IfNotPresent` |

|

||||||

|

| `image.pullSecrets` | Image pull secrets | `nil` |

|

||||||

|

| `config.cluster_domain` | The name of the cluster domain. | `cluster.local` |

|

||||||

|

| `config.cluster_name` | The name of the cluster. | `cassandra` |

|

||||||

|

| `config.cluster_size` | The number of nodes in the cluster. | `3` |

|

||||||

|

| `config.seed_size` | The number of seed nodes used to bootstrap new clients joining the cluster. | `2` |

|

||||||

|

| `config.seeds` | The comma-separated list of seed nodes. | Automatically generated according to `.Release.Name` and `config.seed_size` |

|

||||||

|

| `config.num_tokens` | Initdb Arguments | `256` |

|

||||||

|

| `config.dc_name` | Initdb Arguments | `DC1` |

|

||||||

|

| `config.rack_name` | Initdb Arguments | `RAC1` |

|

||||||

|

| `config.endpoint_snitch` | Initdb Arguments | `SimpleSnitch` |

|

||||||

|

| `config.max_heap_size` | Initdb Arguments | `2048M` |

|

||||||

|

| `config.heap_new_size` | Initdb Arguments | `512M` |

|

||||||

|

| `config.ports.cql` | Initdb Arguments | `9042` |

|

||||||

|

| `config.ports.thrift` | Initdb Arguments | `9160` |

|

||||||

|

| `config.ports.agent` | The port of the JVM Agent (if any) | `nil` |

|

||||||

|

| `config.start_rpc` | Initdb Arguments | `false` |

|

||||||

|

| `configOverrides` | Overrides config files in /etc/cassandra dir | `{}` |

|

||||||

|

| `commandOverrides` | Overrides default docker command | `[]` |

|

||||||

|

| `argsOverrides` | Overrides default docker args | `[]` |

|

||||||

|

| `env` | Custom env variables | `{}` |

|

||||||

|

| `schedulerName` | Name of k8s scheduler (other than the default) | `nil` |

|

||||||

|

| `persistence.enabled` | Use a PVC to persist data | `true` |

|

||||||

|

| `persistence.storageClass` | Storage class of backing PVC | `nil` (uses alpha storage class annotation) |

|

||||||

|

| `persistence.accessMode` | Use volume as ReadOnly or ReadWrite | `ReadWriteOnce` |

|

||||||

|

| `persistence.size` | Size of data volume | `10Gi` |

|

||||||

|

| `resources` | CPU/Memory resource requests/limits | Memory: `4Gi`, CPU: `2` |

|

||||||

|

| `service.type` | k8s service type exposing ports, e.g. `NodePort`| `ClusterIP` |

|

||||||

|

| `service.annotations` | Annotations to apply to cassandra service | `""` |

|

||||||

|

| `podManagementPolicy` | podManagementPolicy of the StatefulSet | `OrderedReady` |

|

||||||

|

| `podDisruptionBudget` | Pod distruption budget | `{}` |

|

||||||

|

| `podAnnotations` | pod annotations for the StatefulSet | `{}` |

|

||||||

|

| `updateStrategy.type` | UpdateStrategy of the StatefulSet | `OnDelete` |

|

||||||

|

| `livenessProbe.initialDelaySeconds` | Delay before liveness probe is initiated | `90` |

|

||||||

|

| `livenessProbe.periodSeconds` | How often to perform the probe | `30` |

|

||||||

|

| `livenessProbe.timeoutSeconds` | When the probe times out | `5` |

|

||||||

|

| `livenessProbe.successThreshold` | Minimum consecutive successes for the probe to be considered successful after having failed. | `1` |

|

||||||

|